Chapter Six: The History of Electronic and Computer Music

1. Electronic Music Historical Timeline

See also A Brief History of Sound Synthesis and Simon Crab's amazing 120 Years of Electronic Music for more information about earlier instruments and experiments.

1902

Thaddeus Cahill sets up the Telharmonium or Dynamophone, a 200-ton array of Edison dynamos that produced differently pitched hums according to the speed of the dynamos. The electrical output was "broadcast" over telephone lines to subscribing hotels and audiences in Telharmonic Hall. While the instrument was not ultimately a commercial success, it did inspire the thinking of Ferruccio Busoni, who himself would go on to influence the thinking of Varèse and others.

1906

Lee DeForest invents and patents the Triode Vacuum Tube (called appropriately the Audion) which led to amplification of electrical signals by 1912 and tube oscillator circuits several years after that, opening the door for an explosion of electronic instruments in the 1920's.

1907

Ferruccio Busoni publishes Sketch for a New Aesthetic of Music (Entwurf einer neuen Ästhetik der Tonkunst), discussing the use of electrical and other new sound sources in future music. He was to have a profound effect on his pupil, Edgard Varèse, who met him in 1907. Varèse wrote toward the end of his life that reading Busoni's writings was a "milestone in my musical development, and when I came upon 'Music is born free, and to win freedom is its destiny,' it was like hearing the echo of my thought." While Busoni heralded the use of electronics and microtonality, he never made use of either in his compositions.

1910's

Italian futurists investigate, classify, and produce noise instruments called intonarumori (pictured left with Russolo). Most notable was Luigi Russolo, who wrote a letter/treatise entitled L'arte dei Rumori or The Art of Noises, in which he embraced the new world of sounds that modernity had brought forth. The Futurists embraced the infinite variety of noise, old and new, including noises created electronically and by other mechanisms, for use by future composers who would break out of the traditional orchestral palette. They classified these new sounds into six main noise categories (including the ever-popular "death rattles"). Marinetti met with Busoni in 1912, and Russolo and Marinetti gave the first concert of Futurist music in 1914 in Milan using their intonarumori. No original instruments are known to survive; most were lost or destroyed in WWII bombing. The Futurists were one of the few artistic movements in history that gravitated towards Fascism.

DeForest and Edwin Armstrong separately develop audio tube oscillators in 1917.

1920's

Varèse (pictured left) writes Ionisation and George Antheil composes Ballet Mécanique: both use percussion and noise instruments in a unique, featured way (i.e. they are the stars, and not just supporting cast) and deal with the "liberation of sound" and a new view of musical "spatial-temporal" relationships. Varèse later said of his ideas, "I dream of instruments obedient to thought, and which, supported by a flowering of undreamed-of timbres, will lend themselves to any combination I choose to impose and will submit to the exigencies of my inner rhythm." A collection of his lectures on the liberation of sound can be downloaded here. Antheil's Ballet Mécanique was the score for a Dadaist/post-Cubist film by Fernand Léger (click link to see on YouTube, but worth searching for if link has moved).

Electronic instruments invented during this period include the Theremin (1919-20), Ondes-Martenot (1928), Trautonium (1928), and Hammond Organ (1929) based on technical principles of the Telharmonium. See 120years.net for an unbelievably complete description of these and many other early electronic instruments. In 1924, General Electric released moving-coil loudspeakers, a technology still in use today. Harry Nyquist develops digital sampling theory. Optical film soundtrack composing begins.

Messiaen wrote Fête des belles eaux (1937) for six ondes-martenot and featured the instrument as soloist in Trois petites liturgies de la Présence Divine (1944) and Turangalîla-Symphonie (1946-8). Strauss, Hindemith and Varèse (two used originally in his Ecuatorial) composed for the Trautonium.

1930's

Improvement of amplifiers and invention of the tape recorder. Neo-Dadaist-inspired John Cage composes Imaginary Landscape no. 1 (1939) and no. 2 (1942) using test-tones from recordings, which were played on variable-speed turntables. Léon Theremin designs the Rhythmicon for composer Henry Cowell, which could generate 15 complex and syncopatable rhythms based on a transposable fundamental frequency. Cowell wrote Concerto for Rhythmicon and Orchestra and several other works with it, though it soon fell into disuse.

1940's

Egyptian-born composer Halim El-Dabh experiments with wire recorders in Cairo. In 1944, he produces The Expression of Zaar, using field recordings of a street festival and manipulating them using equipment such as reverb chambers at the Middle East Radio station. It is considered by many to be the first piece of musique concrète performed in concert. The piece was later abridged and released as Wire Recorder Piece. El-Dabh came to the US, worked at the Columbia-Princeton studios and is perhaps best known for his expansive Leiyla Visitations.

Free Music Machine (pictured left) invented, which generated sound via eight oscillators controlled in parameters of pitch, volume, completely free rhythm and timbre by freely sketching on an optically read main roll of 80"-wide graph paper, along with several auxiliary rolls. It was developed by composer Percy Grainger (who was also a student of Busoni) and Burnett Cross. It was perhaps a forerunner to the graphic-sound-control UPIC system Xenakis developed decades later.

1948

RTF (Radiodiffusion-Télévision Française, which became ORTF (Office de RTF) in 1964 and later GRM) broadcasts Pierre Schaeffer's Étude aux chemins de fer on Oct. 5th. This marks the beginning of studio realizations and, for some (see El-Dabh above), of musique concrète. Musique concrète is characterized as compositions whose materials originate as real-world, recorded sounds which are reorganized and sometimes processed via tape splicing, speed or direction change, looping, filtering, and so forth. Schaeffer conceptualized the original sounds as L'Objet Sonore, or the sonic object, a unit of composition plucked from its original context. The extremely deep aesthetic is explained by Schaeffer (pictured left) in his Treatise on Musical Objects, recently translated into English. Pierre Henry collaborates with Schaeffer on Symphonie pour un homme seul (1950), the first major work of musique concrète. In 1951, the studio was formally established as the Groupe de Recherche de Musique Concrète, which included other composers such as Messiaen, Boulez and Stockhausen.

1950

Studio established in Cologne (Köln), Germany by Herbert Eimert, Robert Beyer and Werner Meyer-Eppler (physicist) at German radio NWDR (Nordwestdeutscher Rundfunk, which later became WDR). Karlheinz Stockhausen (pictured left) becomes its most influential composer. While the French RTF was primarily concerned with manipulation of acoustic sound sources (musique concrète), the NWDR studios were equipped with electronic sound generators and modifiers, and the creative product was referred to as elektronische Musik, in which the sounds were created artificially via oscillators, noise generators, etc.

Toru Takemitsu and other Japanese artists founded the artists' collective Jikken Kobo in 1951, which soon after collaborated with TTK (soon to be Sony Corporation) and used their new tape recorders to produce musique concrète, multimedia works and concerts of both Western avant-garde and Japanese pieces.

1952

Four compositions for tape recorder by Vladimir Ussachevsky and Otto Luening (pictured left) are presented at the Museum of Modern Art, New York, on October 28. The works were composed at the Columbia Tape Music Center, which they had established the previous year and which was the forerunner of the Columbia-Princeton Electronic Music Center. Raymond Scott (an extremely financially successful radio/TV/experimental composer and inventor) designs arguably the first electro-mechanical sequencer in 1953, which consisted of hundreds of switches controlling stepping relays, timing solenoids, tone circuits and 16 individual oscillators. Scott also invents the Clavivox synthesizer (1956), with a subassembly by Robert Moog, at his Manhattan Research Company.

1953

Edgard Varèse receives Ampex tape recorder as gift and begins work on Déserts, for wind and percussion orchestra with tape interludes. Stockhausen completes Studie I, fashioned from pure sine tones organized in just intonation ratios (except for the "one-gun salute" to his daughter's first birthday). Famous guitarist, guitar designer and strong proponent of improved recording technology Les Paul commissions Ampex to build an eight-track tape recorder (pictured with Les Paul left), as he had been previously recording multiple tracks on individual synchronized acetate disks. He had pioneered the tape flanging effect the year before.

1955

Milan Studio di Fonologia RAI established, with Luciano Berio as artistic director. Toshiro Mayuzumi founds the NHK studio in Tokyo, modeled after the German NWDR facilities, where he composed three études in 1955 modeled after Stockhausen's. Philips studio established at Eindhoven, Holland, but was shifted to University of Utrecht Institute of Sonology in 1960. Canadian composer Hugh Le Caine composes Dripsody, an oft-cited quintessential example of musique concrète, using a recording of a single drip of water into a waste basket as the only sound source. He created loops of the drip transposed by a keyboard connected to a varispeed tape recorder. Sadly, Le Caine died in a motorcycle accident in 1977 at the age of 63.
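The varispeed transposition described for Dripsody can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `semitones_to_speed` and `varispeed` are hypothetical, the keyboard is assumed to be equal-tempered, and linear interpolation stands in for the continuous motion of tape. It does not model Le Caine's actual machine.

```python
def semitones_to_speed(semitones):
    """Tape-speed ratio for a transposition (assumes equal temperament)."""
    return 2 ** (semitones / 12)


def varispeed(samples, speed):
    """Read `samples` at `speed` times normal rate, as a tape would be.

    speed > 1 raises pitch and shortens the sound; speed < 1 lowers
    pitch and lengthens it. Linear interpolation fills in values
    between the original sample points.
    """
    out = []
    pos = 0.0
    while pos < len(samples) - 1:
        i = int(pos)
        frac = pos - i
        out.append(samples[i] * (1 - frac) + samples[i + 1] * frac)
        pos += speed
    return out


# A stand-in "drip": a short ramp instead of a real recording.
drip = [0, 1, 2, 3, 4]
octave_up = varispeed(drip, semitones_to_speed(12))     # twice as fast
octave_down = varispeed(drip, semitones_to_speed(-12))  # half as fast
```

Playing the same recording faster or slower changes pitch and duration together, which is why one drip could yield a whole range of pitched events.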

1956-7

Lejaren Hiller and Leonard Isaacson compose the Illiac Suite for string quartet, the first complete work of computer-assisted composition or CAC (also called algorithmic composition), created using the ILLIAC I computer at the University of Illinois. Stockhausen composes Gesang der Jünglinge ("Song of the Youths"), the first major work of the WDR Cologne studio, based on text from the Book of Daniel. The thirteen-minute work combined an interplay of both concrète (boy's voice) and electronic means by matching timbres and phonemes, with statistical density principles of organization Stockhausen learned from courses with Werner Meyer-Eppler. Gesang originally sported 5-channel spatialization.

Max Mathews, working for Bell Labs, creates first circuitry to convert digital data into analog sound, resulting in the Music I synthesis language. It had one voice, no envelope, but pitch, duration and amplitude could be specified. It would lead to a 60-year string of developments of direct digital synthesis languages and programs, beginning with what are today referred to as the Music-N family of languages.

1958

Varèse's Poème électronique played on over 400 loudspeakers at the Philips Pavilion (pictured left, designed by famed architect Le Corbusier, whose assistant was Iannis Xenakis!) of the 1958 Brussels World's Fair. Concret PH, by Xenakis, was also played inside the pavilion. Attendees processed through the pavilion to view a film, slides and lighting on the walls and ceiling. The evolving sound-path was diffused up and down the textured, asbestos-coated interior, from three original mono tapes that were pre-panned onto three stereo tapes, with all being spatially controlled both by a perforated control tape and by sound projectionists using telephone-dial-style rotary controllers. A virtual Poème reconstruction can be viewed here.

1959

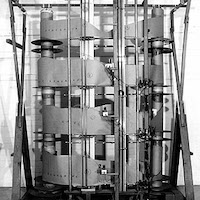

Columbia-Princeton Electronic Music Center was established in New York with the help of a $175,000 Rockefeller grant. It incorporated the room-sized RCA Mark II synthesizer (pictured left with Milton Babbitt), built in 1957 and designed by RCA engineers Harry Olson and Herbert Belar as a successor to their more limited RCA Mark I, with envelope generators developed by Robert Moog. The tube-based circuitry was controlled by punch-tape rolls, visible in the picture, that automated many of the sound parameters and were better suited than tape splicing to the ultra-serial techniques of many of its users. The output was originally cut to a synchronized record lathe. It is often considered the first major voltage-controlled synthesizer. Composers using Victor, as it was affectionately known, included Babbitt, Davidovsky, Luening, Ussachevsky, Wuorinen, Smiley, Druckman, Shields, Spiegel, Carlos and many more. Milton Babbitt produced Philomel (1964) and several other compositions on the machine.

1960's

Development of practical large mainframe computer synthesis. Max Mathews of Bell Labs (pictured left with his later Radio Drum interface) perfects MUSIC V, a direct digital synthesis language, by 1968. The father of computer music, as he is known, applied sampling theory to computer algorithms beginning in 1957 with Music I (mentioned above), much of which was written in assembly language for specific models of computers (to speed up the lengthy calculations). The program generated digital sample output from "orchestra" and "score" files, originally input with punch cards. The program's digital output was written to magnetic data tape that then had to be played through a digital-to-analog converter (DAC) to produce audio. Many generations of ports and iterations are still being used today, for example, with Csound and Cmix, and the principles Mathews developed are in use by almost all digital audio applications. In short, he was a giant of a man, actively inventing and attending festivals until his death in 2011 at the age of 84.

Development of smaller voltage-controlled synthesizers by Moog and others makes instruments available to most composers, universities and popular musicians. The best-known use of the Moog modular was the album Switched-On Bach by Wendy Carlos. Beginning of live electronic performance. Paul Ketoff developed the Synket, a live performance instrument used extensively by composer John Eaton in works such as Concert Piece for Synket and Orchestra (1967). London-based EMS released the VCS3 (known as the Putney) and later the legendary Synthi 100. ONCE Festivals, featuring multimedia theater music, were organized by Robert Ashley and Gordon Mumma in Ann Arbor, Michigan.

1962

San Francisco Tape Music Center (pictured left) established by Morton Subotnick and Ramon Sender, and soon joined by Pauline Oliveros (who served as director), incorporating a voltage-controlled synthesizer built by Donald Buchla based around automated sequencing, used in album-length Subotnick pieces such as Silver Apples of the Moon (1967) and The Wild Bull (1968).

James Tenney, composer and theoretician, completes the computer-synthesized Four Stochastic Studies at Bell Labs. The year before, as a grad student at the University of Illinois, he had created the now-classic Collage No. 1 (Blue Suede), shredding Elvis's rendition. Tenney left Bell Labs in 1964 after developing his PLF composing program there. Also in 1962, Hubert Howe, Godfrey Winham and J. K. Randall, at Princeton University, not far from Bell Labs, improved upon Max Mathews's and Joan Miller's Music IV user interface and called it Music IV-B (later Music IV-BF when Howe rewrote it in Fortran rather than assembly language). It ran on Princeton's mainframe, the same model IBM 7094 Bell Labs had, one of the first transistorized mainframes. When IBM changed over to the IBM 360 mainframe toward the end of the decade, Barry Vercoe, who was moving from Yale to MIT, updated the program to run in 360 assembly language and appropriately called it Music 360.

1964-1968

Stockholm Elektronmusikstudion (EMS) is founded in 1964 along with Montreal's McGill University Electronic Music Studio (another EMS). Stockhausen composes Mikrophonie I (for amplified and signal-processed tam-tam). In 1965, Robert Moog's R. A. Moog Co. releases its first commercial voltage-controlled modular analog synthesizer (pictured left), with design help from Herbert Deutsch. Unlike the tube-based RCA Mark II, Moog incorporated transistors into the circuitry.

John Chowning of Stanford CCRMA studios develops digital FM synthesis (anecdotally by accident while experimenting with extreme rates of vibrato), eventually leading to the commercial release of the FM-based Yamaha DX-7 synthesizer (see below). The royalties from the audio FM patent fund the Stanford CCRMA studios for many years to follow. Max Mathews and F. Richard Moore develop GROOVE, a real-time digital control system for analog synthesis, used extensively by composers Laurie Spiegel and Emmanuel Ghent in the 1970's. In 1966, Chowning, along with David Poole, writes Music 10, a variant of Music IV designed to run on Stanford's PDP-10 computer.
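The "extreme vibrato" idea behind FM synthesis can be illustrated with a minimal sketch. It assumes the common textbook two-oscillator form, y(t) = sin(2π·fc·t + I·sin(2π·fm·t)), where fc is the carrier frequency, fm the modulator frequency and I the modulation index. The function name `fm_samples` and its parameters are hypothetical, and nothing here reflects Chowning's original code or the DX-7's internals.

```python
import math


def fm_samples(carrier_hz, modulator_hz, index, duration_s, sample_rate=44100):
    """Return samples of simple two-oscillator FM as a list of floats.

    With index == 0 the result is a plain sine at carrier_hz; raising
    the index adds sidebands spaced at multiples of modulator_hz
    around the carrier, brightening the timbre.
    """
    n = round(duration_s * sample_rate)
    out = []
    for i in range(n):
        t = i / sample_rate
        modulation = index * math.sin(2 * math.pi * modulator_hz * t)
        out.append(math.sin(2 * math.pi * carrier_hz * t + modulation))
    return out


# Slow, shallow modulation is heard as vibrato; an audio-rate
# modulator is heard as a change of timbre instead.
vibrato = fm_samples(440, 6, 0.5, 0.01)
bright_tone = fm_samples(440, 220, 5.0, 0.01)
```

The only difference between the two calls is the modulator rate and depth, which is the sense in which FM timbres grow out of vibrato pushed to extremes.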

In 1968, Mathews, Miller, Moore and Jean-Claude Risset complete Music V, perhaps the most famous direct digital synthesis program ever written (it was distributed to studios with two large boxes of punch cards!). The following year, Risset, still researching at Bell Labs, composes Mutations using it.

1970's

Minimoog, a small integrated synthesizer, makes analog synthesis easily available and affordable, along with newcomers ARP and Oberheim. Charles Dodge composes Speech Songs (1972) based on early Bell Labs speech synthesis research. Jon Appleton (with Jones and Alonso) invents the Dartmouth Digital Synthesizer, later to become the New England Digital Corp.'s Synclavier (1st version pictured left), making real-time digital synthesis a reality (you play a key, you hear a note). The Synclavier would go on to be not only a real-time performance keyboard, but also a major studio hard-disk recording platform for those who could afford the pricey setup. The Fairlight CMI (Computer Music Instrument), a cheaper real-time digital synthesizer and sampler with a digital sequencer, was released in 1979.

Princeton opens a computer music lab (primarily to convert digital tape to analog) in 1970 with Godfrey Winham and Kenneth Steiglitz so composers would not have to drive their digital tapes up to Bell Labs in Murray Hill, NJ to be converted. It would eventually be renamed the Godfrey Winham Lab after Winham's early death. In 1975, with Chowning's guidance, Stanford opens the Center for Computer Research in Music and Acoustics or CCRMA. Many luminaries in the field would come to work there, including Max Mathews, Chris Chafe, Ge Wang, Julius O. Smith and John Pierce.

In 1973, Barry Vercoe, who had founded the MIT Experimental Music Studio (yet another EMS) two years earlier, writes Music 11, a next-generation music synthesis program, named in part for the PDP-11 minicomputer it was designed for. Music 11 was notable for providing separate sampling rates for audio signals and control signals (which did not require as much resolution as audio, and hence made the process more efficient), a distinction maintained in almost all subsequent digital music applications (for example, in current-day MAX, objects with tildes (~) are computed at audio rate, while those without are computed at a lower control rate).
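The audio-rate versus control-rate split can be sketched as a block-based render loop. This is a simplified illustration, not Music 11 code: the function `render` is hypothetical, the name `ksmps` (audio samples per control period) is borrowed from Csound's conventions, and the default rates are arbitrary assumptions.

```python
import math


def render(freq_hz, duration_s, sample_rate=44100, control_rate=441):
    """Render a decaying sine, updating the envelope only at control rate.

    Returns (samples, control_updates) so the saving is visible: the
    envelope is evaluated once per block, the oscillator once per sample.
    """
    ksmps = sample_rate // control_rate          # samples per control period
    n_blocks = round(duration_s * control_rate)
    out = []
    control_updates = 0
    for block in range(n_blocks):
        # Control-rate signal: a linear decay, computed once per block.
        amp = 1.0 - block / n_blocks
        control_updates += 1
        for k in range(ksmps):
            # Audio-rate signal: the oscillator, computed every sample.
            i = block * ksmps + k
            out.append(amp * math.sin(2 * math.pi * freq_hz * i / sample_rate))
    return out, control_updates


samples, updates = render(440, 1.0)
```

With these assumed rates, one second of sound needs 44,100 oscillator evaluations but only 441 envelope evaluations, which is the efficiency gain the paragraph above describes.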

IRCAM, the Institut de Recherche et Coordination Acoustique/Musique (Paris), becomes a major center for computer music research and realization and develops the 4X computer system, featuring then-revolutionary real-time digital signal processing. With Pierre Boulez as director, and Berio named as artistic director, many notables in the field researched and worked there, including John Chowning, Stanley Haynes, Miller Puckette, Jean-Claude Risset, Tristan Murail, Jonathan Harvey and many more. Murail, a leading proponent of the spectralist approach to composition, writes Désintégrations for large ensemble and electronic playback in 1982, based on spectral modeling of real instruments. Murail, who taught composition at IRCAM for seven years before moving to Columbia University, follows that a decade later with his second IRCAM commission, resulting in L'Esprit des dunes, another work for ensemble and electronics, but this time with the electronics coordinating with the ensemble via an interactive MAX patch controlled by a keyboard.

In 1976, Iannis Xenakis established the Centre d'Etudes de Mathématique et Automatique Musicales or CEMAMu. It is perhaps best known for the development of the UPIC (Unité Polyagogique Informatique CEMAMu) composition system, which takes realtime input from a graphics tablet to control digital musical parameters. UPIC was used by Xenakis to produce Mycènes Alpha in 1978 and several other works. Earlier, Xenakis had established a mirror facility at Indiana University, the Center for Mathematical and Automated Music (CMAM), now CECM, where he worked from 1966 to 1972 before leaving IU.

In 1977, MIT Press begins publishing the Computer Music Journal, complete with sound discs to accompany the peer-reviewed articles on the latest research and creative activity in the field.

Paul Lansky, of Princeton University, composes Six Fantasies on a Poem by Thomas Campion in 1978-9 using an IBM 360 mainframe computer and a math process he applied to music cross-synthesis called Linear Predictive Coding or LPC. For the work, he takes the spoken voice of his spouse and transforms the aspects of speech into harmonized textures based on the contours, vowels, articulations, etc. of the text. Lansky went on to write several digital signal processing software packages including Cmix, MIX and RT, which are carried on today in the open-source RTcmix software package developed in the mid-1990's by Brad Garton, David Topper and subsequently several others such as CECM director John Gibson and former CECM student Doug Scott.

Also in 1979, the International Computer Music Association (ICMA) is founded and is still one of the primary worldwide organizations for the field, holding annual conferences called International Computer Music Conference(s) or ICMC.

1980's

Pierre Boulez's Répons (1981) for 24 musicians and 6 soloists uses the IRCAM 4X (above) to transform and route soloists to a loudspeaker system. MIDI instruments and software make powerful control of sophisticated instruments easily affordable by many studios and individuals. Acoustic sounds are reintegrated into studios via sampling and sampled-ROM-based instruments. Miller Puckette (pictured left) develops graphic signal-processing software for the 4X called MAX (after Max Mathews), later ports it to Macintosh (with David Zicarelli extending it for Opcode) for real-time MIDI control, bringing algorithmic composition within reach of most composers with a modest computer programming background. Yamaha introduces the DX-7 MIDI keyboard, based on FM synthesis algorithms developed by John Chowning at Stanford University.

MIDI Specification 1.0 published in 1985 by the MIDI Manufacturers Association. Also in 1985, Digidesign releases Sound Designer software for the Macintosh, the first consumer-level hard-disk recording and editing software. David Jaffe, Julius Smith and Perry Cook (CCRMA studios of Stanford University) prototype physical modeling, a method of synthesis in which physical properties of existing instruments are represented as computer algorithms which can then be manipulated and extended. Barry Truax composes Riverrun in 1986, using new granular synthesis techniques controlled in realtime using a PDP mini-computer controlling a DMX-1000 DSP. Kyma, a graphics-based sound-synthesis and DSP environment created by Carla Scaletti in 1986, is released for the proprietary Platypus DSP, and is still going strong in 2020 with new DSP boxes traditionally named after large South American rodents.

In 1982, Jonty Harrison established the BEAST, the Birmingham ElectroAcoustic Sound Theatre at the University of Birmingham, England, which focused on acousmatic music especially designed for live active loudspeaker diffusion. The facility houses a concert hall with a large number of speakers, perhaps up to 100, of various sizes, heights, and even directions, with some facing away from the audience. Acousmatic music continues to gain in popularity, particularly in Europe and Canada.

In 1984, Barry Schrader, along with Jon Appleton and several others, forms the Society for Electro-Acoustic Music in the United States or SEAMUS, which is still one of the premier organizations in the field, holding national annual conferences as well as disseminating a series of recordings, now near 30 CD's, called Music from SEAMUS. In 1989, the Center for New Music and Audio Technologies (CNMAT) is founded at UC Berkeley. In the late 1990's at CNMAT, Adrian Freed and Matt Wright would develop the Open Sound Control (OSC) network protocol to transport music-parameter data across networks and between devices.

In 1985, MIT opened its Media Lab with Barry Vercoe as a founding member and leader of the Music, Mind, and Machine group. Tod Machover also joined the Media Lab faculty that same year after serving as IRCAM's Director of Musical Research since 1980. Princeton was collaborating more and more with Bell Labs, and by the mid-1980's had developed its own facilities with English-born Godfrey Winham and Paul Lansky. Also in 1985, Barry Vercoe updated his Music 11 program to Csound, which is still widely used today, and continued the lineage of the Music-N family of languages (though minus the '-N' nomenclature).

Also, in 1989, the Steve Jobs-designed NeXT computer was released with the Music Kit (changed later to Sound Kit) software built into the OS. Written by David A. Jaffe and Julius O. Smith, it supported the onboard Motorola 56001 DSP chip. Around the same time, IRCAM also incorporated their live-processing software expertise into the IRCAM Signal Processing Workstation (ISPW), up to three large 2-processor expansion boards for the NeXT computer running their Faster Than Sound (FTS) server and a version of MAX, also with multichannel recording and playback. At around $12K at the time (about $25K now), it was not cost-effective for most smaller studios. The FTS/MAX software was later ported, without the need for the DSP boards, to SGI and other platforms.

1990's

Interactive computer-assisted performance becomes popular. Tod Machover's (MIT, IRCAM) Begin Again Again for "hypercello," an interactive system of sensors measuring physical movements of the cellist, is premiered by Yo-Yo Ma. Max Mathews perfects the Radio Baton to complement his Conductor program for real-time tempo, dynamic and timbre control of a pre-input electronic score. Morton Subotnick releases the multimedia CD-ROM All My Hummingbirds Have Alibis (pictured left). MIDI sequencing programs expand to include digital audio. A large number of works for instrumentalist (or ensemble) and tape are composed, such as James Mobberley's Caution to the Winds for piano and tape, a genre pioneered by Mario Davidovsky's Synchronisms series several decades earlier.

Opcode releases a consumer version of MAX software in 1990, characterized by connecting graphic objects (similar to the IRCAM software objects) on the screen via mouse-routed patch cords. Opcode's MAX was written by David Zicarelli, and at first manipulated MIDI data only. Zicarelli reacquired the rights and released it through his current company, Cycling '74, in 1997, around the same time Miller Puckette released Pure Data (Pd), a similar interface. Both could manipulate audio data as well, with MAX being renamed MAX/MSP (for MAX Signal Processing). Both are still going strong as of this writing in 2019, with MAX having added a visual creation element originally called Jitter (now the whole package of data, audio and visuals is just called MAX).

In the mid-90's, James McCartney creates the SuperCollider realtime synthesis programming language, a descendant of the Music-N-style languages (such as Music V) which is open source and free to download. SuperCollider is extremely powerful, does require some study and programming skills, and is frequently used for live coding, where composer/performers are typing to guide a composition as it is in process.

2000's

A new interest in expressive non-traditional real-time controllers developed, exemplified by the establishment of NIME (New Interfaces for Musical Expression), which held its first conference in Seattle in 2001. The interest was enhanced by the release of the inexpensive Italian-designed Arduino ATmega microcontroller board in 2005. Subsequent models are still going strong for both live performance and installations. The Arduino allowed for physical, optical and environmental controller input to be digitized and used by applications, including the new Processing language, MAX, SuperCollider and Kyma. This in turn led to a new branch in the field called data-driven instrument design and composition, practiced today by composers such as Jeff Stolet and Chi Wang. Enhancing this trend, in 2004, the Lemur multi-touch control surface was developed and in 2008, TouchOSC was released for iPhone, adding graphic, wireless touch control of CNMAT's Open Sound Control to manipulate a wide variety of software.

Around 2003-2005, laptop orchestras, such as PLOrk (Princeton Laptop Orchestra), developed by Perry Cook and Dan Trueman, and SLOrk (Stanford Laptop Orchestra), developed by Ge Wang, were established as a modern version of earlier electronic ensembles, such as Theremin and Ondes-Martenot ensembles of the prior century. Two years prior, ChucK, an audio programming language written by Perry Cook and Ge Wang geared towards laptop orchestras and live coding, was released. Also, in 2003, the Sonic Arts Research Centre (SARC) is established in Belfast. One of its main focuses was 3D audio spatialization, diffused in its Sonic Lab, with Gary Kendall as principal researcher.

2010's

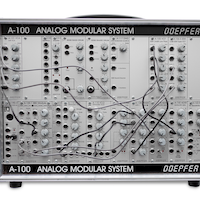

Even in electronic music, everything that goes around eventually comes around. Following renewed interest by rock project studios returning to reel-to-reel tape in the 1990's, and a return to music released on vinyl, the field of electronic music synthesis has returned to modular synthesis, complete with patch cords, in the form of Eurorack-format modular instruments. Though Dieter Doepfer codified the physical format in Germany in 1996 (his A-100 synth pictured left), it has certainly gained popularity in the last decade, with thousands of individual modules for sale by hundreds of designers, from which users pick and choose to build their own instruments. Some of these modules are purely analog, but many are hybrid analog/digital, and are driven by both MIDI keyboards and computers.

In 2013, Virginia Tech ICAT studios opens their Cube, a high-density loudspeaker array (HDLA) under the direction of Eric Lyon, Charles Nichols and Ico Bukvic. This built upon theatre-based immersive audio work done by Stephen Beck several years earlier at Louisiana State University. The Cube speaker dome or acousmonium contained 150 speakers with computer-controlled routing to create complex 3D audio environments and sound directions. It also supported 3D wavefield synthesis, high-order ambisonics and video projection.

2020's

WE'RE HERE! WE MADE IT!

Recommended Historical Reading

Chadabe, Joel: Electric Sound

Dean, Roger, Ed.: The Oxford Handbook of Computer Music

Russcol, Herbert: The Liberation of Sound

Appleton, Jon and Perera, Ronald, Ed.: The Development and Practice of Electronic Music

Holmes, Thom: Electronic and Experimental Music: Technology, Music, and Culture