Chapter One: An Acoustics Primer

12. What is reverberation (reverb)? | Page 2

Artificial Reverb

Artificial reverb has been around for a long time. In addition to the engineer's ability to control the balance of direct and reflected, reverberated sound by adjusting the distance of microphones from a source, both electronic and physical approaches to adding reverb to live or recorded sources began to appear in the 1920s. The famed Hammond Organ company developed a spring reverb for its organs, but also sold the units to companies such as Fender, who created their iconic Twin Reverb amp in the 1960s. Who can forget the jangling sound of a rock star guitarist kicking their Fender amp to set off the springs? Reverb or echo chambers were highly reflective rooms fitted with a speaker, a microphone and perhaps some baffles. A percentage of the signal could be fed back from the microphone into the speaker to increase decay times, and the distance between the speaker and the microphone controlled the amount of room ambience. In the late 1950s, EMT developed a physically huge, 600 lb. plate reverb: a box housing a thin metal sheet or plate, a transducer and pickups. The transducer set the plate vibrating, though the low end suffered somewhat, since lower frequencies travel more slowly through the plate. Indiana University had two, and what I took away from my experience with them was their ability to control reverb time remotely with a motor that moved a damping panel closer to or farther from the plate. Abbey Road Studios had four EMT 140s, in addition to echo chambers like those described above.

In 1962, Manfred Schroeder, working at Bell Labs, wrote a seminal paper introducing an algorithm for creating "artificial reverberation which is indistinguishable from the natural reverberation of real rooms" by electronic means. The basic Schroeder reverberator was a series of time-delayed allpass filters; a second design fed parallel feedback comb filters into series allpass filters for more sound coloration, and later variants place a lowpass filter inside each comb's feedback loop. This basic concept was the perfect vehicle for artificial digital reverb coding for many years, implemented commercially in many variations by Lexicon, Alesis and others, as well as in programs such as Csound and Max/MSP (for example, with Freeverb), and many more. Schroeder admitted that the major drawback to his original design was that it did not decay exponentially, as natural room reverberation does.
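A minimal sketch of the comb-plus-allpass structure described above, in plain Python: parallel feedback comb filters are averaged, then passed through allpass filters in series. The delay lengths and gains are hypothetical, illustrative choices rather than Schroeder's published values, and a practical implementation would process audio in blocks with circular buffers instead of whole-signal lists.

```python
def feedback_comb(x, delay, g):
    """Feedback comb filter: y[n] = x[n - delay] + g * y[n - delay]."""
    y = [0.0] * len(x)
    for n in range(delay, len(x)):
        y[n] = x[n - delay] + g * y[n - delay]
    return y


def allpass(x, delay, g):
    """Schroeder allpass filter: y[n] = -g*x[n] + x[n - delay] + g*y[n - delay]."""
    y = [0.0] * len(x)
    for n in range(len(x)):
        past_x = x[n - delay] if n >= delay else 0.0
        past_y = y[n - delay] if n >= delay else 0.0
        y[n] = -g * x[n] + past_x + g * past_y
    return y


def schroeder_reverb(x, comb_delays=(1116, 1188, 1277, 1356), comb_gain=0.84,
                     allpass_delays=(225, 556), allpass_gain=0.5):
    """Parallel combs, averaged, then allpasses in series.

    All delay lengths (in samples) and gains are hypothetical defaults.
    """
    combs = [feedback_comb(x, d, comb_gain) for d in comb_delays]
    # Average the parallel comb outputs sample by sample.
    y = [sum(samples) / len(combs) for samples in zip(*combs)]
    for d in allpass_delays:
        y = allpass(y, d, allpass_gain)
    return y


# Usage: the network's impulse response at an assumed 44.1 kHz sample rate.
fs = 44100
impulse = [1.0] + [0.0] * (fs // 2 - 1)  # half a second of signal
ir = schroeder_reverb(impulse)
```

The allpass stages leave the overall energy of the signal unchanged while scattering it in time, so they thicken the echo pattern; the combs set how long the sound rings.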

We have mentioned convolution reverb, which is the current industry-standard method for a more natural-sounding reverb, particularly for the exponential decay portion. Chapter Four: Convolution has a detailed discussion of the mechanisms for implementation as well as some sound examples. The short version is that an environment's impulse response (IR) is recorded as described on the previous page and then combined with either live sound or an audio file by convolution. A simple description of convolution: the frequency spectrum of the impulse response is multiplied by the spectrum of the live or recorded direct sound, which is equivalent to convolving the two signals in the time domain. Audio convolution hardware and plug-ins often come with a wide variety of impulse response files, some recorded in famous concert halls and rooms, though even something as simple as a snare shot could be used as an IR. Digital Performer's ProVerb convolution reverb displays the active impulse response file and typical parameters.
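To make the time-domain/frequency-domain equivalence concrete, here is a small, dependency-free Python sketch. It convolves a dry signal with an IR directly, then gets the same result by multiplying the two zero-padded spectra and transforming back. The naive DFT is only for illustration; real convolution reverbs use FFTs, typically partitioned into blocks so they can run in real time. The signals are hypothetical toy values.

```python
import cmath


def convolve(x, h):
    """Direct convolution: y[n] = sum over k of x[k] * h[n - k]."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xv in enumerate(x):
        for j, hv in enumerate(h):
            y[i + j] += xv * hv
    return y


def dft(x):
    """Naive discrete Fourier transform (O(n^2), for illustration only)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Inverse of dft()."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def convolve_via_spectra(x, h):
    """Zero-pad both signals to the full output length, multiply spectra, invert."""
    n = len(x) + len(h) - 1
    X = dft(list(x) + [0.0] * (n - len(x)))
    H = dft(list(h) + [0.0] * (n - len(h)))
    return [v.real for v in idft([a * b for a, b in zip(X, H)])]


# Usage: a hypothetical three-sample "dry" sound and a short, decaying toy IR.
dry = [1.0, 0.5, -0.25]
ir = [1.0, 0.0, 0.6, 0.0, 0.36]
wet = convolve(dry, ir)  # approximately [1.0, 0.5, 0.35, 0.3, 0.21, 0.18, -0.09]
```

Each dry sample launches a scaled copy of the whole IR, and the copies overlap and add; the spectral route produces the same numbers up to rounding error.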

Manfred R. Schroeder

Manfred Schroeder (1926-2009), physicist and mathematician, built upon the natural reverberation studies done by Wallace C. Sabine and instigated further psychoacoustic studies to determine how best and most efficiently to simulate reverb artificially through electronics and digital algorithms. The studies also led to the Schroeder frequency, the frequency above which room resonances overlap so densely that they behave statistically, like noise, and need not be simulated individually. You may also recognize the results of his work on improving sound diffusion in rooms in a wall treatment called a Schroeder diffuser (technically a Quadratic-Residue Diffuser, or QRD), which hangs in many major recording studios; one version is pictured on the right.
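Both ideas above reduce to short formulas. The Schroeder frequency is commonly estimated as f_S ≈ 2000·√(T60/V), with T60 in seconds and V in cubic meters. A QRD's well depths follow the quadratic-residue sequence n² mod N for a prime number of wells N, scaled by half the design wavelength divided by N. The Python sketch below assumes a speed of sound of 343 m/s, and the rooms and the 7-well, 500 Hz diffuser are hypothetical examples.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed (air at roughly 20 degrees C)


def schroeder_frequency(rt60_s, volume_m3):
    """Approximate frequency above which room modes overlap densely
    enough to be treated statistically: f_S = 2000 * sqrt(T60 / V)."""
    return 2000.0 * math.sqrt(rt60_s / volume_m3)


def qrd_well_depths(n_wells, design_freq_hz):
    """Well depths in meters for a quadratic-residue diffuser with a prime
    number of wells: depth_n = (n^2 mod N) * wavelength / (2N)."""
    wavelength = SPEED_OF_SOUND / design_freq_hz
    sequence = [(n * n) % n_wells for n in range(n_wells)]
    return [s * wavelength / (2 * n_wells) for s in sequence]


# Usage with hypothetical spaces and a hypothetical 7-well diffuser.
print(round(schroeder_frequency(2.0, 10000.0), 1))  # large hall -> 28.3
print(round(schroeder_frequency(0.5, 100.0), 1))    # small room -> 141.4
print([round(d, 3) for d in qrd_well_depths(7, 500.0)])
```

The comparison shows why small rooms are harder to treat: individual modes stay audible up to a much higher frequency than in a large hall.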

Almost all studios have either hardware or software reverb units. The parameter controls include reverb time (how long the reflections take to die away, usually specified as the time for a 60 dB drop in level), pre-delay time (how long after the direct sound the first early reflection arrives), filters that determine which frequencies are reverberated longer or shorter (or not at all), and diffusion, damping and color, defined below. Many reverb units also have separate filters and compressors that allow the user to tune the acoustic characteristics of the imaginary environment to avoid troublesome resonances.
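Reverb time is conventionally quoted as RT60, the time for the sound to decay by 60 dB (a factor of 1000 in amplitude). In a comb-filter-based reverb, one common way to hit a target RT60 is to choose each comb's feedback gain g so that g, raised to the number of trips around the loop in RT60 seconds, equals 0.001; for a delay of D samples at sample rate fs that gives g = 10^(-3D/(fs·RT60)). The Python sketch below assumes that model, with a hypothetical sample rate and comb delay; pre-delay is simply a conversion from milliseconds to samples.

```python
import math


def comb_gain_for_rt60(delay_samples, rt60_s, sample_rate):
    """Feedback gain g such that g ** (loop trips in rt60_s) == 0.001, i.e. -60 dB."""
    return 10.0 ** (-3.0 * delay_samples / (sample_rate * rt60_s))


def predelay_samples(predelay_ms, sample_rate):
    """Convert a pre-delay setting in milliseconds to a whole number of samples."""
    return round(predelay_ms * sample_rate / 1000.0)


fs = 44100     # assumed sample rate
delay = 1116   # hypothetical comb delay in samples
g = comb_gain_for_rt60(delay, 2.0, fs)
loops_in_rt60 = 2.0 * fs / delay
print(round(g, 4))                                     # -> 0.9163
print(round(20 * math.log10(g ** loops_in_rt60), 6))   # -> -60.0
print(predelay_samples(20.0, fs))                      # -> 882
```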

A Few Definitions for Reverb Controls

A more detailed discussion of DSP-related audio plug-ins will appear later, but here is a good place to discuss some of the more esoteric controls you are likely to encounter with artificial reverbs.

Diffusion, a setting found on many reverb units, refers to the phase coherence of sound reflecting off a surface. A perfectly reflective surface returns sound with one coherent phase. Less perfect surfaces (or deliberately imperfect ones, such as acoustic diffuser treatments) return the incident wave to the listener as multiple reflections out of phase with each other, much as a prism or a frosted light bulb breaks up light (think light diffuser). The acoustic result for the composer is that a higher reverb diffusion setting shortens the space between the early reflections and creates a denser sound early on, with more of the comb effect mentioned above. With diffusion set to zero, early reflections may sound discrete and separate, more like echoes, depending on their spacing and amplitude settings.
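Many algorithmic reverbs place a chain of allpass filters ahead of the main reverb and tie the diffusion control to the allpass coefficient, though implementations vary and that mapping is an assumption here. The Python sketch counts how many distinct reflections a single impulse produces in its first 400 samples: at a coefficient of zero each allpass collapses into a plain delay and the impulse comes out as one discrete echo, while a higher coefficient smears it into a dense cluster. The delay lengths are hypothetical.

```python
def allpass(x, delay, g):
    """Schroeder allpass: y[n] = -g*x[n] + x[n - delay] + g*y[n - delay]."""
    y = [0.0] * len(x)
    for n in range(len(x)):
        past_x = x[n - delay] if n >= delay else 0.0
        past_y = y[n - delay] if n >= delay else 0.0
        y[n] = -g * x[n] + past_x + g * past_y
    return y


def diffuse(x, diffusion, delays=(13, 17, 29, 37)):
    """Run x through allpasses in series; 'diffusion' (0 to just under 1)
    is used as every allpass coefficient. Delays are hypothetical."""
    for d in delays:
        x = allpass(x, d, diffusion)
    return x


def count_reflections(x, floor=1e-6):
    """Number of samples whose magnitude exceeds a small floor."""
    return sum(1 for v in x if abs(v) > floor)


impulse = [1.0] + [0.0] * 399
print(count_reflections(diffuse(impulse, 0.0)))  # -> 1 (one discrete echo)
print(count_reflections(diffuse(impulse, 0.7)))  # many overlapping reflections
```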

Damping, another parameter often found on reverb units, controls how much more quickly higher frequencies die out than lower frequencies. We use this as an aural cue for the size of a space, along with the arrival time of the first reflections. Damping also helps the user dial in the desired impression of room materials, such as curtains and carpets, which absorb more high-frequency energy than low. A concert hall or football field will have a higher degree of damping than a small studio with hard, reflective walls.
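One widely used way to implement damping (Freeverb's approach, for example) is a one-pole lowpass filter inside each comb filter's feedback loop, so high frequencies lose a little more energy on every trip around the loop. Whether a particular product works this way is an assumption. The Python sketch computes the resulting decay time at several frequencies from the filter's magnitude response; the comb delay, feedback and damping values are hypothetical.

```python
import math


def onepole_lowpass_gain(damp, freq_hz, sample_rate):
    """|H| of y[n] = (1 - damp)*x[n] + damp*y[n-1] at freq_hz (0 <= damp < 1)."""
    w = 2.0 * math.pi * freq_hz / sample_rate
    return (1.0 - damp) / math.sqrt(1.0 - 2.0 * damp * math.cos(w) + damp * damp)


def decay_time(feedback, damp, delay_samples, sample_rate, freq_hz):
    """Seconds for a lowpass-feedback comb to decay by 60 dB at freq_hz."""
    loop_gain = feedback * onepole_lowpass_gain(damp, freq_hz, sample_rate)
    seconds_per_trip = delay_samples / sample_rate
    # Trips needed so that loop_gain ** trips == 0.001, i.e. -60 dB.
    return seconds_per_trip * -3.0 / math.log10(loop_gain)


fs, delay, feedback = 44100, 1116, 0.84  # hypothetical settings
for f in (100, 1000, 8000):
    undamped = decay_time(feedback, 0.0, delay, fs, f)
    damped = decay_time(feedback, 0.3, delay, fs, f)
    print(f"{f} Hz: {undamped:.2f} s undamped, {damped:.2f} s damped")
```

With damping at zero every frequency decays at the same rate; raising it leaves the lows almost untouched while shortening the highs.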

Color regenerates some of the initial reflections, where room modes (the room's "color") are most discernible, into the late reverb, extending the initial room resonance.

Length parameters on convolution reverbs allow the user to "stretch" or "shrink" the IR file to help avoid troublesome resonance modes, particularly in the lower range, by effectively changing the size of the room to a small degree while maintaining its basic character.
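As a rough illustration of stretching an IR, the Python sketch below resamples it by linear interpolation. Played back at the original sample rate, a stretched IR lengthens every reflection path by the same factor and lowers every resonance by it, much like scaling the room's dimensions. This is only one possible approach; commercial convolution reverbs may implement length controls differently (for example, by truncating or reshaping the tail), and the function names and values here are hypothetical.

```python
def stretch_ir(ir, factor):
    """Resample ir (at least 2 samples) to about len(ir) * factor samples
    using linear interpolation."""
    n_out = max(2, round(len(ir) * factor))
    step = (len(ir) - 1) / (n_out - 1)  # input samples advanced per output sample
    out = []
    for i in range(n_out):
        pos = i * step
        k = min(int(pos), len(ir) - 2)  # keep k + 1 inside the input
        frac = pos - k
        out.append(ir[k] * (1.0 - frac) + ir[k + 1] * frac)
    return out


def shifted_mode_hz(mode_hz, factor):
    """A resonance at mode_hz in the original IR plays back at mode_hz / factor."""
    return mode_hz / factor


# Usage: stretch a hypothetical decaying toy IR by 5% and see where a 100 Hz mode lands.
toy_ir = [0.9 ** n for n in range(100)]
longer = stretch_ir(toy_ir, 1.05)
print(len(longer))                              # -> 105
print(round(shifted_mode_hz(100.0, 1.05), 1))   # -> 95.2
```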

For further explanations of common reverb controls, as well as tips on how to effectively use them, see Craig Anderton's excellent article on the subject.

Echoes vs. Reverb

Reflections from surfaces that stand out from normal reverberation levels are called echoes. A prominent echo arriving shortly after the original sound may be called a slapback echo. Concert halls with a flat, focusing back stage wall may produce slapback echoes from sharp, loud sounds, such as percussion. For reverb effects, the echoes continue to build up and overlap to the point where they are no longer perceivable as individual echoes. One could conceivably accomplish this up to a certain point with the feedback control on an echo plug-in, but such plug-ins normally lack the other reverb controls and qualities.
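The arithmetic behind a slapback echo is simple: the reflection travels to the wall and back, so its delay is the round-trip distance divided by the speed of sound. A feedback echo, by contrast, repeats at a fixed interval and drops by the same number of decibels each time, which is why it stays a train of distinct repeats rather than building into a dense reverb. The Python sketch assumes 343 m/s for the speed of sound; the distances and settings are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed


def slapback_delay_ms(distance_to_wall_m):
    """Delay in ms of a single reflection from a wall distance_to_wall_m away."""
    return 2.0 * distance_to_wall_m / SPEED_OF_SOUND * 1000.0


def echo_train(delay_ms, feedback, repeats):
    """(time_ms, level_dB relative to the first repeat) for a simple
    feedback delay; feedback must be between 0 and 1 (exclusive)."""
    return [(k * delay_ms, 20.0 * math.log10(feedback ** (k - 1)))
            for k in range(1, repeats + 1)]


print(round(slapback_delay_ms(20.0), 1))  # wall 20 m away -> 116.6
for t, level in echo_train(120.0, 0.5, 4):
    print(f"{t:.0f} ms  {level:.1f} dB")
```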

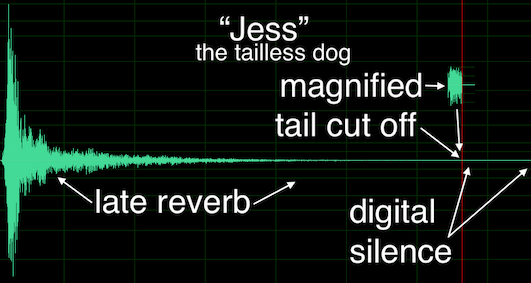

REVERB MIXING TIP: DON'T CUT OFF YOUR TAIL!

It is quite common in audio editing, when monitoring at lower volumes, to prematurely cut off the last part of the reverb tail at the end of an audio file, leading to an unpleasant and unnatural denouement, so don't be that person. If you play the audio file below, its waveform to the left looks as if the reverb tail has fully ended, but if you listen closely, you'll hear that it hasn't. At concert hall volumes, this becomes painfully apparent to listeners. You can usually magnify the waveform in your audio editor to see where the reverb tail fully ends, and of course, listen to the tail at a higher volume.
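Before trimming, it can help to locate the last sample that is still above a chosen level rather than trusting the zoomed-out waveform. The Python sketch below assumes samples normalized to ±1.0 full scale and uses a hypothetical default threshold of -90 dBFS; choose the threshold to suit your material and playback level, and leave a short fade after the point it finds.

```python
def last_audible_index(samples, threshold_dbfs=-90.0):
    """Index of the last sample at or above threshold_dbfs, or -1 if none."""
    threshold = 10.0 ** (threshold_dbfs / 20.0)  # dBFS -> linear amplitude
    for i in range(len(samples) - 1, -1, -1):
        if abs(samples[i]) >= threshold:
            return i
    return -1


# Usage: a synthetic tail that decays 60 dB in 0.5 s at a hypothetical 1 kHz rate.
fs, rt60 = 1000, 0.5
tail = [10.0 ** (-3.0 * n / (fs * rt60)) for n in range(2 * fs)]
print(last_audible_index(tail, -50.0))  # -> 416 (about 0.42 s)
```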


Active Acoustics

Active acoustics, which Schroeder discusses in the artificial reverb paper mentioned above, are electronic systems that responsively alter the acoustic properties of a room or concert hall in real time. Several different approaches exist, but all use some form of multichannel overhead mic'ing and overhead playback into the hall, in most cases to extend reverberation time or to color it. Some systems mic only the stage signal, while others also mic the hall itself, which means picking up the regenerated signals being played back into the environment, usually from loudspeakers on the hall's boundary walls. This runs some risk of ringing or feedback, so hybrid systems that combine both approaches are also available. A control panel or software with acoustic presets, and the ability to create custom ones, gives the hall engineer great flexibility. Some appreciate the expanded acoustic possibilities without the need for expensive physical changes to the hall, while others find the results unconvincing. I was shocked to learn Philips put an active acoustics system into Milan's famed La Scala in 1955!

ANECHOIC CHAMBERS

Special chambers for acoustic research, for recording special sound examples and for testing equipment noise levels are called anechoic chambers (an = no, echoic = echoes); their reverb times should approach zero. The image is of Microsoft's anechoic facility in Redmond, Washington, which at the time of writing held the Guinness World Record for the quietest room in the world, a whopping -20.35 dBA, well below the threshold of human hearing and probably quiet enough to hear Bill Gates' thoughts.
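For a sense of scale, a sound pressure level converts back to pressure as p = p_ref · 10^(L/20), with p_ref = 20 µPa. The Python sketch applies that to the record figure; it treats the A-weighted reading as if it were an unweighted level, which is a simplification.

```python
REFERENCE_PRESSURE_PA = 20e-6  # 20 micropascals, the standard reference for dB SPL


def level_to_pressure_pa(level_db):
    """Convert a sound pressure level in dB to RMS pressure in pascals."""
    return REFERENCE_PRESSURE_PA * 10.0 ** (level_db / 20.0)


p = level_to_pressure_pa(-20.35)
print(f"{p * 1e6:.2f} micropascals")                              # -> 1.92 micropascals
print(f"{p / REFERENCE_PRESSURE_PA:.3f} of the 0 dB reference")   # -> 0.096 of the 0 dB reference
```

In other words, the chamber's background sound pressure is roughly a tenth of the nominal 0 dB reference level.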