Chapter Four: Synthesis

14. A Digital Synthesis Language Sampler

Kyma

Prepared by Chi Wang

Associate Director, Indiana University Center for Electronic and Computer Music

Kyma is a powerful sound creation environment that combines flexible object-oriented programming software with Audio Processing Unit (APU) hardware acceleration. Carla Scaletti developed and released the first version of Kyma in 1986, and in 1987 added the Platypus signal processor as a computational accelerator. Together with Kurt Hebel, she founded the Symbolic Sound Corporation in 1989. The system is designed primarily for musicians, sound designers, and data researchers in various fields who work in sound synthesis, music composition, real-time musical performance, digital audio processing, and data sonification. The Kyma software is an object-oriented, domain-specific programming language based on Smalltalk that runs on both Mac and Windows platforms. The external hardware accelerator is dedicated exclusively to the computation and realization of sound. As of this writing, one current APU model, part of a series named after South American rodents, is the Pacarana, pictured below.

Two central features of Kyma are its modularity and the extent to which it is data-driven.

Modularity

Modularity is the capacity of a system to form more complex structures by connecting and combining simpler elements. Kyma shares this principle with many “modular” software and hardware music environments, but the depth to which modularity shapes sound creation in Kyma is striking. Inspired by Pierre Schaeffer’s objet sonore, Kyma’s Sound objects are the elemental components of the system. They are represented primarily as on-screen icons, they execute basic tasks such as generating, modifying, and combining audio and control data, and they are connected to create signal flow networks that produce and modify digital representations of sound. Kyma calls a single Sound object or a network of connected Sound objects a Sound.

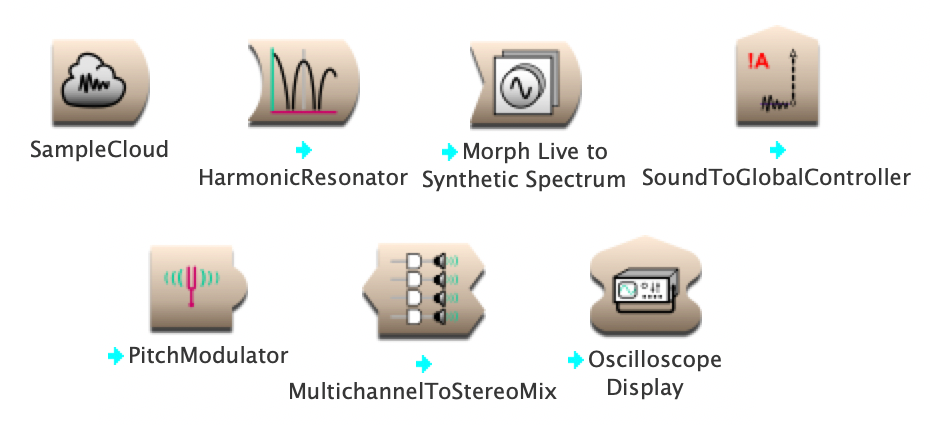

Sound objects are represented as small icons of different shapes (Fig. 1). A user can listen to any Sound object by using the “compile, load, start” command. Kyma provides hundreds of such Sound objects, which can be flexibly connected to each other.

Fig. 1

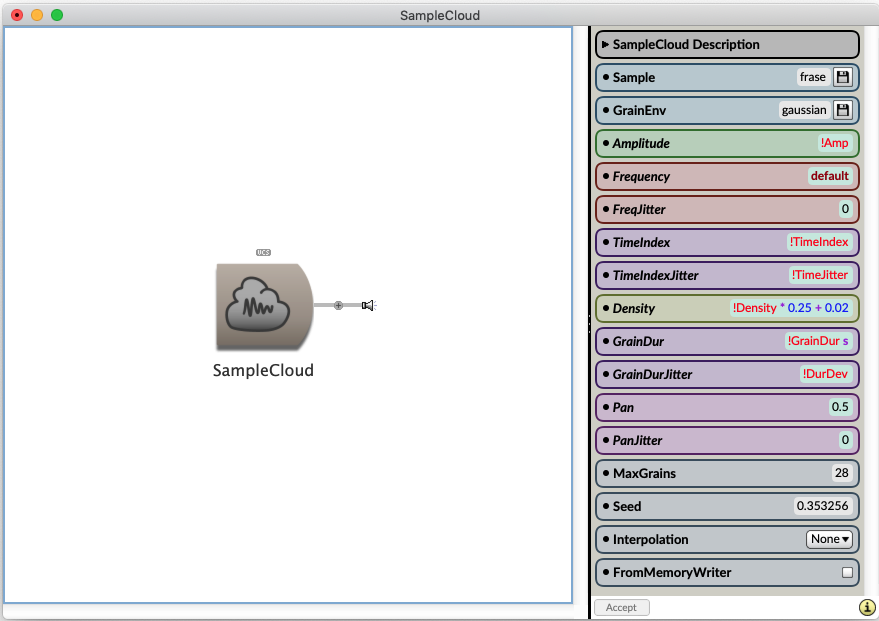

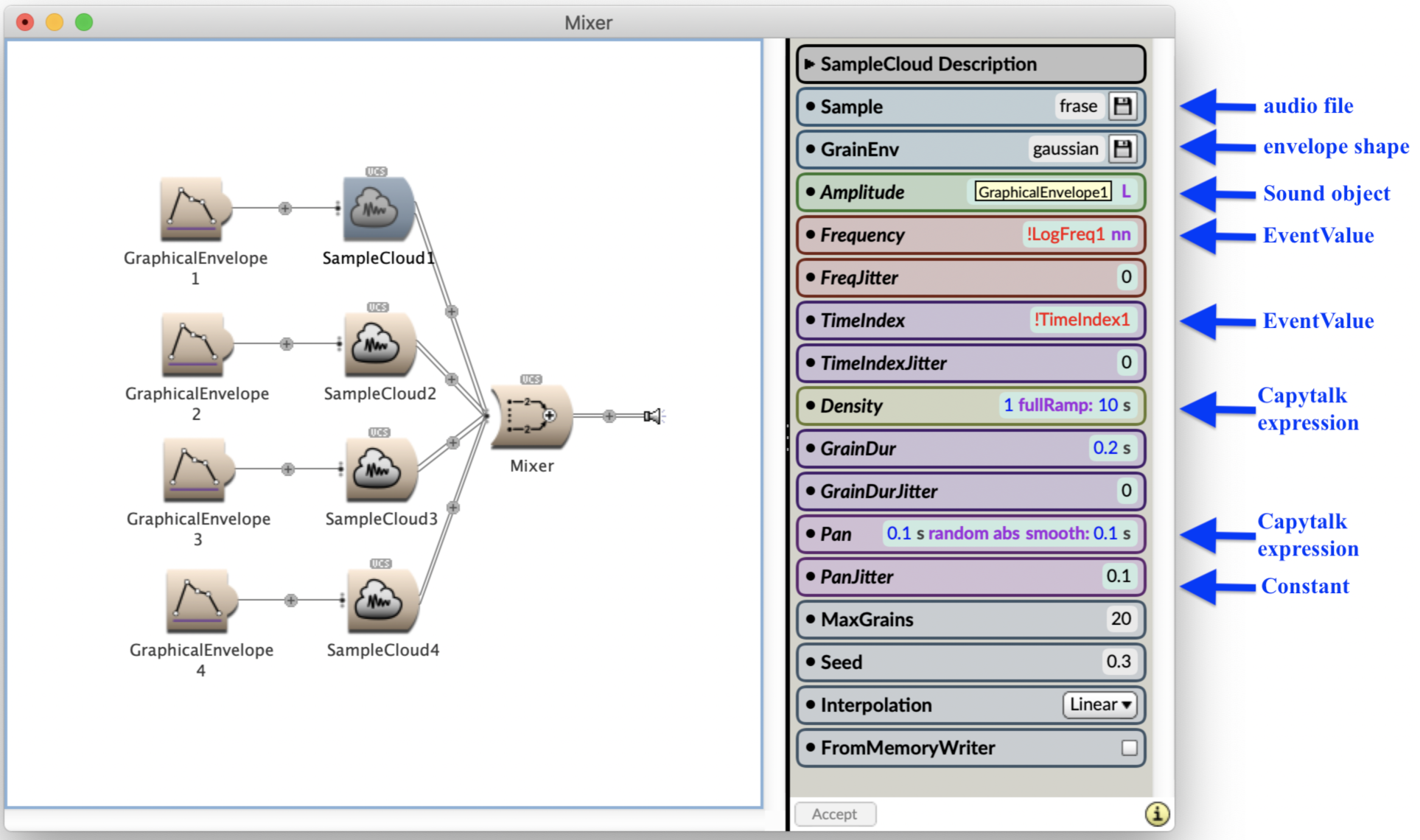

A user can find individual Sound objects, as well as Sound objects that have been combined to provide a specific or useful function, in the System Prototypes strip. Double-clicking a Sound object opens the Sound Editor window, which shows the signal flow network to which the Sound object is connected and the parameters that control its behavior. For example, in the SampleCloud Sound object (Fig. 2) the signal flow is on the left and the Sound object’s parameter fields are on the right. Because the SampleCloud granulates sampled sound, the parameters offered to the user for direct and comprehensive control include Sample (the audio to be granulated), Grain Envelope, Amplitude, Frequency, TimeIndex (a continuum of values in which each value points to a specific position in the audio), Density (the total density of the cloud), Grain Duration, and Pan. The values in the Frequency, TimeIndex, Grain Duration, and Pan parameter fields can also be randomized.

Fig. 2

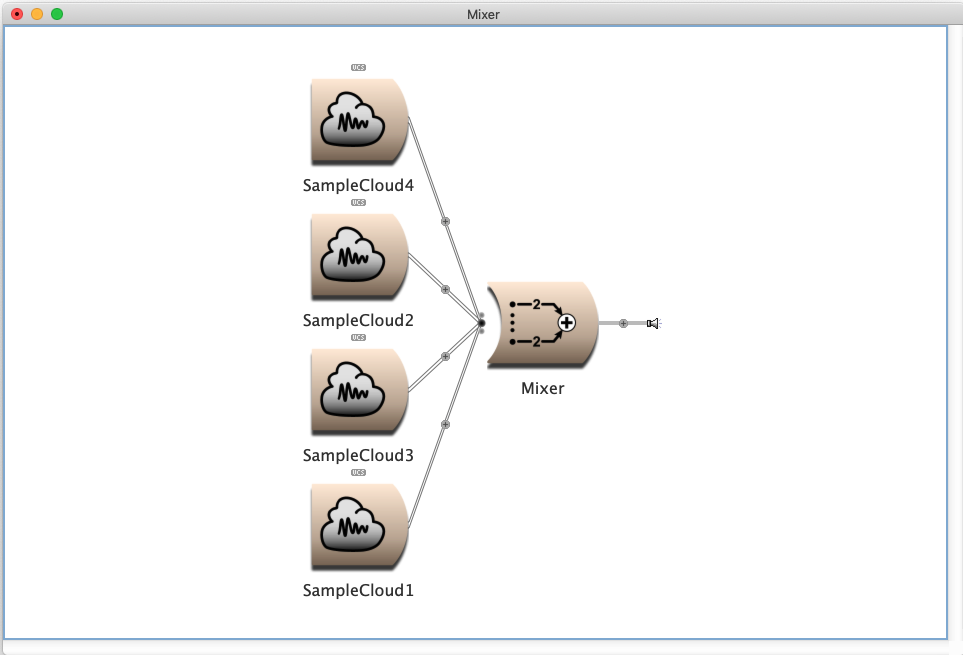
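The granulation idea behind the SampleCloud parameters can be sketched outside Kyma. The Python below is only an illustration, not Kyma's algorithm: the function names `hann` and `sample_cloud` are hypothetical, `n_grains` and `grain_len` stand in for Density and Grain Duration, `time_index` is assumed to run from 0 (start of the audio) to 1 (end), and the Frequency and Pan parameters are left out to keep the sketch short.

```python
import math
import random


def hann(n):
    """Return an n-point raised-cosine grain envelope."""
    if n < 2:
        return [1.0] * n
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def sample_cloud(sample, out_len, grain_len, n_grains,
                 time_index=0.5, time_index_jitter=0.1,
                 amplitude=1.0, seed=0):
    """Overlap-add n_grains enveloped slices of `sample` into a new buffer."""
    if not 0 < grain_len <= min(len(sample), out_len):
        raise ValueError("grain_len must fit inside both sample and output")
    rng = random.Random(seed)
    env = hann(grain_len)
    out = [0.0] * out_len
    last_read = len(sample) - grain_len
    for _ in range(n_grains):
        # Jitter the read position around time_index, then clamp to [0, 1].
        pos = time_index + rng.uniform(-time_index_jitter, time_index_jitter)
        pos = min(1.0, max(0.0, pos))
        read = int(pos * last_read)
        # Scatter the grain to a random position in the output.
        write = rng.randrange(out_len - grain_len + 1)
        for i in range(grain_len):
            out[write + i] += amplitude * env[i] * sample[read + i]
    return out


sr = 8000  # assumed sample rate for this sketch
source = [math.sin(2 * math.pi * 220 * n / sr) for n in range(sr)]
cloud = sample_cloud(source, out_len=sr, grain_len=400, n_grains=50)
```

Raising `n_grains` thickens the cloud, while widening `time_index_jitter` draws grains from a broader region of the source, which is roughly the effect of randomizing the TimeIndex field.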

The signal flow graph (positioned on the left) is where Sound objects are connected or combined. A signal flow is created by dragging and dropping or by copying and pasting. For example, if the objective were to granulate four audio samples with SampleClouds (controlling each Sound object individually) and to combine the output of the four clouds in a Mixer, the signal flow shown in Fig. 3 would result.

Fig. 3
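The shape of such a network, several independently controlled sources feeding one Mixer, can be sketched in Python. This is an analogy only: the classes `Sine`, `Gain`, and `Mixer` and the `render` method are hypothetical names, and sine oscillators stand in for the four SampleClouds.

```python
import math


class Sine:
    """Leaf Sound: a sine oscillator."""

    def __init__(self, freq, sr=8000):
        self.freq, self.sr = freq, sr

    def render(self, n):
        return [math.sin(2 * math.pi * self.freq * i / self.sr) for i in range(n)]


class Gain:
    """Modifier Sound: scales its single input."""

    def __init__(self, source, level):
        self.source, self.level = source, level

    def render(self, n):
        return [self.level * x for x in self.source.render(n)]


class Mixer:
    """Combiner Sound: sums any number of inputs sample by sample."""

    def __init__(self, *inputs):
        self.inputs = inputs

    def render(self, n):
        rendered = [s.render(n) for s in self.inputs]
        if not rendered:
            return [0.0] * n
        return [sum(frame) for frame in zip(*rendered)]


# Four branches, each with its own level, combined in one Mixer.
branches = [Gain(Sine(f), 0.25) for f in (220, 330, 440, 550)]
network = Mixer(*branches)
block = network.render(64)
```

Because every class exposes the same `render` method, a Mixer can itself be an input to another Mixer or Gain, which mirrors how a network of Sound objects is treated as a single Sound.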

To control musical parameters in real time, Kyma relies on Capytalk, a functional reactive programming language that is also highly modular. For instance, with Capytalk, a user can combine two chunks of code such as “1 ramp0: 2 s” and “((0.2 s random abs) * 0.1)” with simple arithmetic operations like addition, subtraction, multiplication, or division. Smalltalk code entered directly into Sound objects supplements and amplifies the considerable power of the modular architecture.
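To make the idea of combining two expressions concrete, here is a rough Python model of adding the two Capytalk chunks quoted above. It is not Capytalk and rests on assumptions about its semantics: `ramp0` is taken to rise from 0 to 1 over the given duration and then return to 0, and `held_random` is taken to pick a new value between -1 and 1 once per period. All function names are hypothetical.

```python
import random


def ramp0(duration):
    """Rise linearly from 0 to 1 over `duration` seconds, then return to 0."""
    def value(t):
        return t / duration if 0.0 <= t < duration else 0.0
    return value


def held_random(period, seed=0):
    """Pick a new value in [-1, 1] every `period` seconds and hold it."""
    def value(t):
        step = int(t // period)
        # Seeding per step makes the held value repeatable within a period.
        return random.Random(seed * 1_000_003 + step).uniform(-1.0, 1.0)
    return value


def absolute(f):
    return lambda t: abs(f(t))


def times(f, k):
    return lambda t: f(t) * k


def plus(f, g):
    return lambda t: f(t) + g(t)


# Rough analogue of: (1 ramp0: 2 s) + ((0.2 s random abs) * 0.1)
control = plus(ramp0(2.0), times(absolute(held_random(0.2)), 0.1))
```

Each piece is a function of time, so arithmetic on expressions produces another function of time. That is the sense in which small expressions compose into larger control signals.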

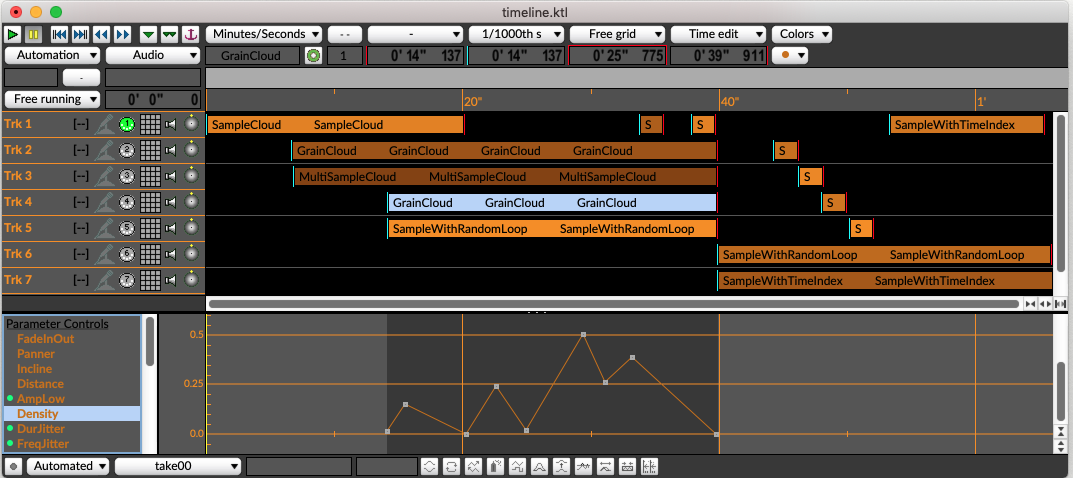

Modularity in Kyma extends to the macro level of musical structure with the Timeline. The Kyma Timeline is the environment in which Kyma Sounds, often constructed as networks of individual Sound objects, are scheduled, combined, controlled, and spatialized. When organizing a musical composition or a live performance, the Timeline and the MultiGrid are excellent environments for managing sound and music at the macro level. The Timeline environment is shown in Fig. 4.

Fig. 4

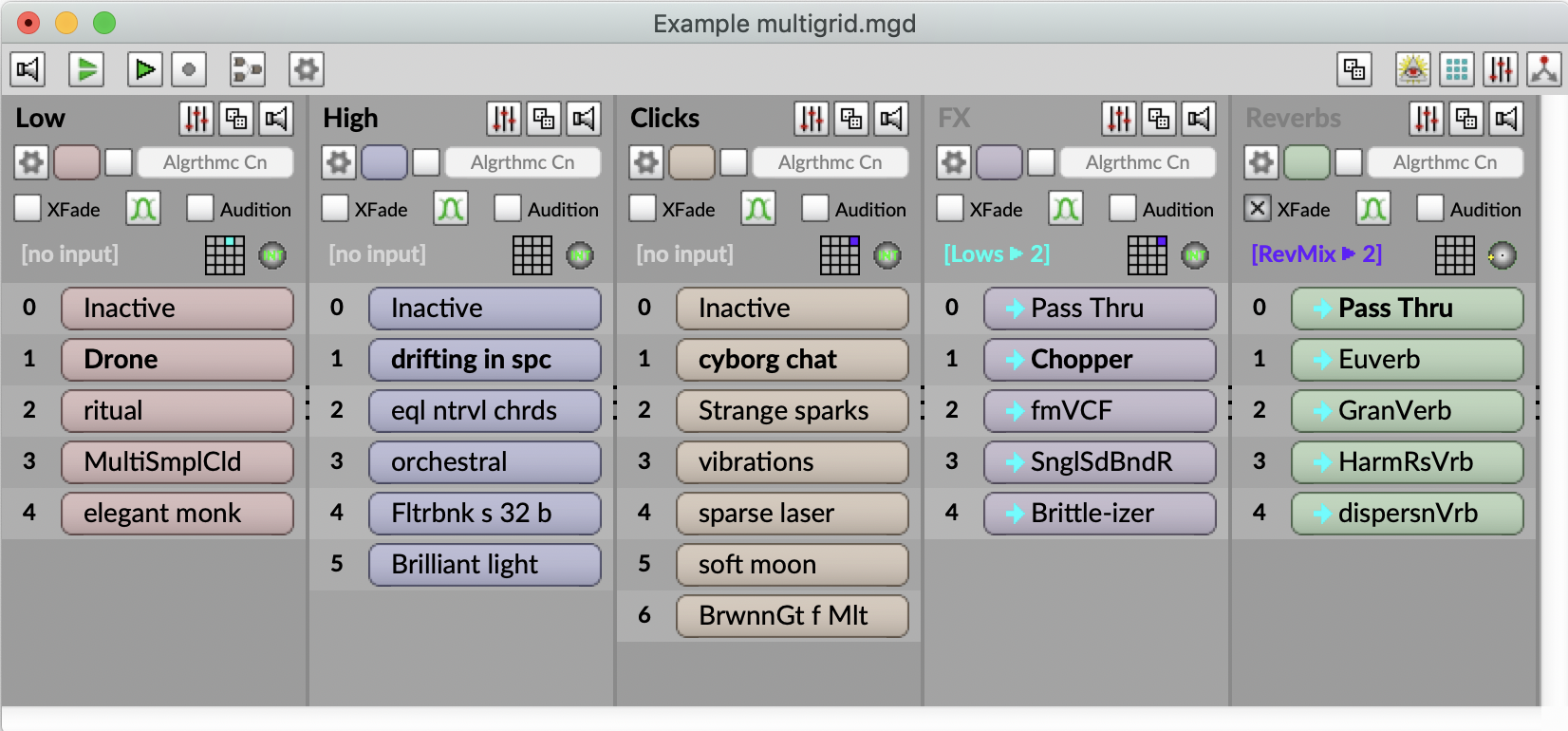

The MultiGrid is another example of a macro-level environment for modules. Visually, you may think of the MultiGrid as a verticalized Timeline: a map of all possible sequences and combinations of Sounds. It is the environment in which Kyma Sounds can be instantaneously and arbitrarily selected to be heard, both individually and in combination, during a real-time performance.

Fig. 5

Data-driven

Kyma not only contains powerful engines for generating control data internally, but is also open to data received in many forms and from many sources. Kyma can receive data through external live audio input, MIDI connections, hardware connections with other software, and virtual control surfaces. Kyma can also generate its own control data with its audio analysis tools (e.g., the Sample File, Time Alignment Utility, and Spectral Analysis editors), with its extensive library of Capytalk expressions, and with the full power of Smalltalk.

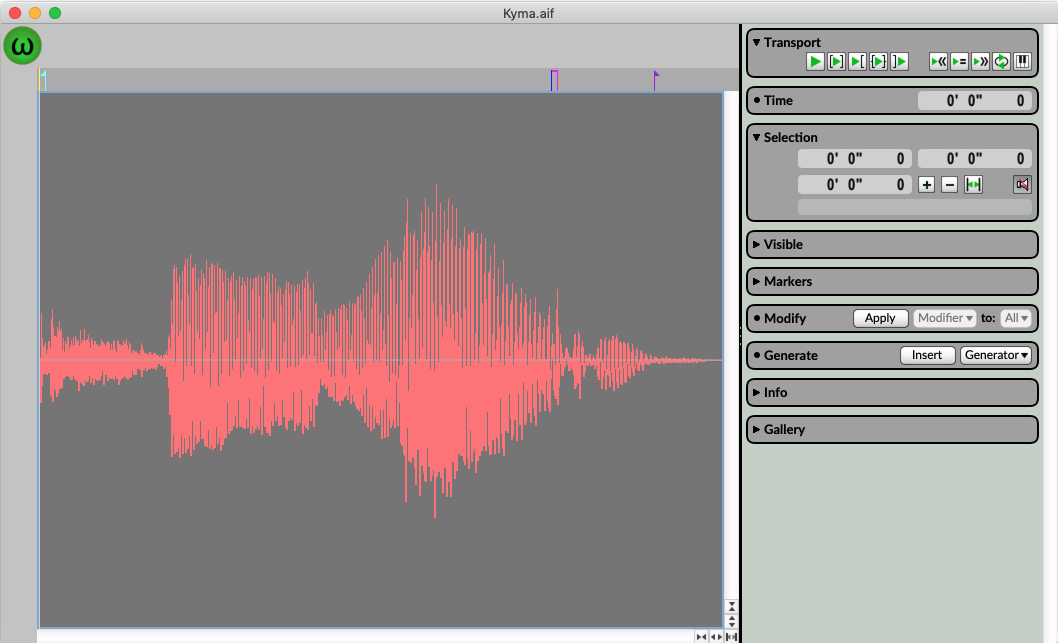

Data that represents and controls sound-producing algorithms can be conveniently visualized and edited in Kyma with its Sample File, Time Alignment Utility, and Spectral Analysis editors. The Sample Editor not only performs typical audio file transformations such as fade in, fade out, and reversal, but also generates custom wave shapes of any duration, extracts unique wave shapes from existing audio files, imports CSV files, and offers many other ways to import data. The Sample Editor is shown in Fig. 6.

Fig. 6 Sample Editor

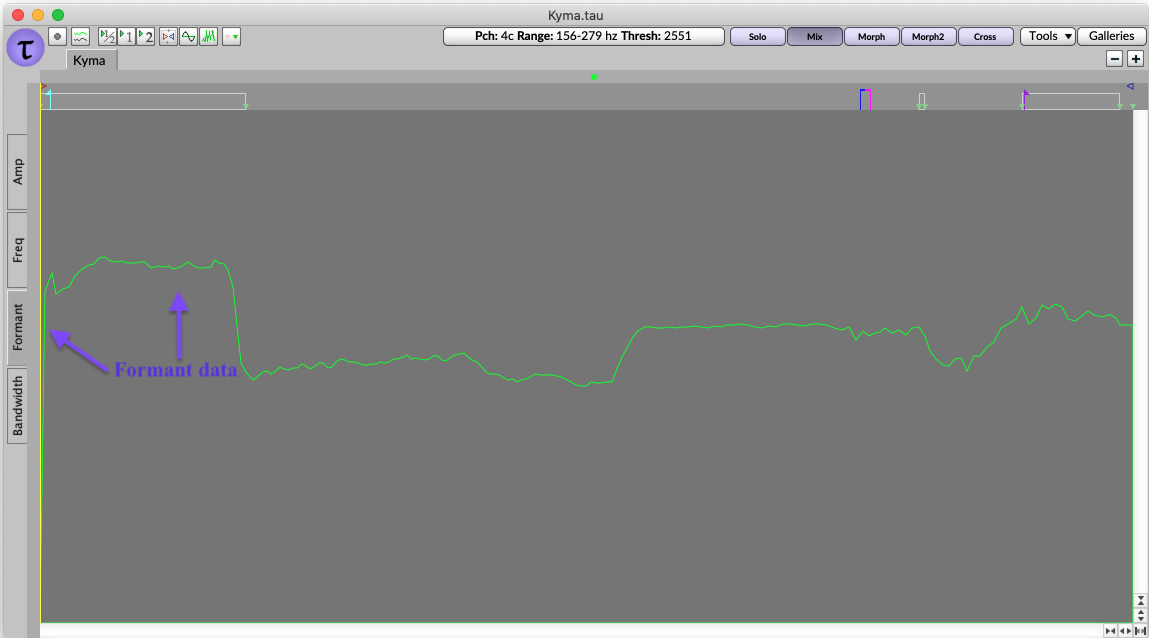
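The transformations named for the Sample Editor are simple to express on a list of samples. The Python below is a minimal sketch of linear fades, reversal, and reading a wave shape from CSV text. The function names are hypothetical, the CSV is assumed to have one value per row and no header, and nothing here reflects how Kyma implements these operations.

```python
import csv
import io


def fade_in(samples, n):
    """Scale the first n samples by a linear ramp from 0 toward 1."""
    n = min(n, len(samples))
    return [x * i / n if i < n else x for i, x in enumerate(samples)]


def fade_out(samples, n):
    """Scale the last n samples by a linear ramp down to 0."""
    return fade_in(samples[::-1], n)[::-1]


def reverse(samples):
    """Return the samples in reverse order."""
    return samples[::-1]


def wave_from_csv(text, column=0):
    """Read one numeric column of CSV text as a wave shape."""
    return [float(row[column]) for row in csv.reader(io.StringIO(text)) if row]


shape = wave_from_csv("0.0\n0.5\n1.0\n0.5\n0.0\n")
faded = fade_out(fade_in([1.0] * 8, 2), 2)
```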

Also embedded in Kyma is the Time Alignment Utility or TAU, a proprietary resynthesis tool "where temporally coordinated amplitude, frequency, formant, and bandwidth envelopes can be mixed or morphed between and among to yield stunning timbral nuance." The TAU Editor is shown in Fig. 7.

Fig. 7 Time Alignment Utility

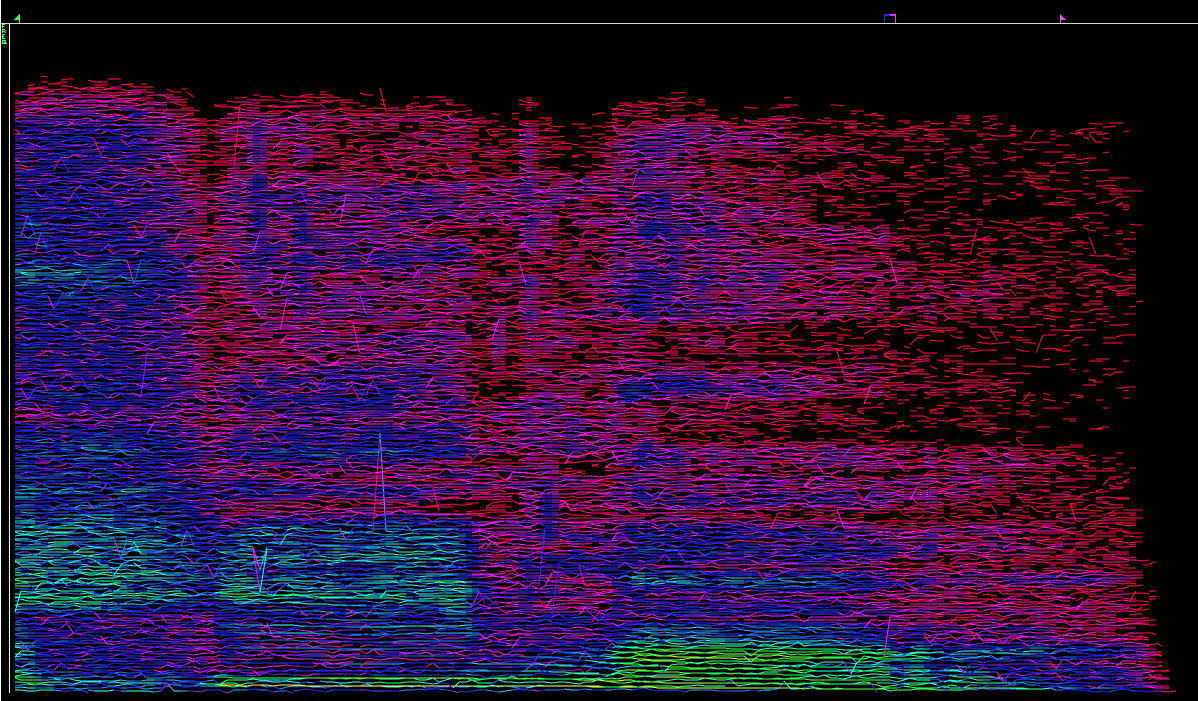

Kyma’s Spectrum Editor provides a powerful environment for viewing and modifying the frequency and amplitude values of the sine wave components of a file over time. The Spectrum Editor is shown in Fig. 8.

Fig. 8 Spectrum Editor
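A spectrum of this kind can be thought of as a table of frequency and amplitude values, one pair per sine wave component per analysis frame. The Python sketch below resynthesizes audio from such a table and shows one possible modification, transposition. The data layout, the function names, and the choice to hold values constant within a frame are assumptions made for illustration, not a description of Kyma's file format or resynthesis method.

```python
import math


def resynthesize(frames, hop, sr=8000):
    """Sum sine partials; each frame is a list of (freq_hz, amp) pairs."""
    phases = [0.0] * len(frames[0])
    out = []
    for frame in frames:
        for _ in range(hop):  # hold each frame's values for `hop` samples
            sample = 0.0
            for k, (freq, amp) in enumerate(frame):
                sample += amp * math.sin(phases[k])
                # Accumulate phase so partials stay continuous across frames.
                phases[k] += 2 * math.pi * freq / sr
            out.append(sample)
    return out


def transpose(frames, ratio):
    """Scale every partial's frequency by `ratio`, leaving amplitudes alone."""
    return [[(freq * ratio, amp) for freq, amp in frame] for frame in frames]


# Two partials over four frames; the second partial is silent.
frames = [[(2000.0, 1.0), (4000.0, 0.0)]] * 4
audio = resynthesize(frames, hop=2)
octave_up = resynthesize(transpose(frames, 2.0), hop=2)
```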

These three editors are supplemented by real-time visualizations provided by the Oscilloscope and Spectrum Analyzer.

Constant or dynamically changing numerical values can be entered directly into a Sound object’s parameter fields to shape its output. Kyma provides multiple ways to enter these values: (1) a fixed constant such as 4, 72, or 3.478735; (2) the result of an arithmetic operation such as addition, subtraction, multiplication, or division; (3) a Capytalk expression, such as 0.1 s random, which produces a dynamically changing stream of values; and (4) a so-called EventValue, which provides the user with a virtual fader, dial, or button. In addition, another Kyma Sound object can supply the numbers for a parameter field if it is pasted directly into that field. Examples of each are shown in the Sound Editor in Fig. 9 below.

On the left, the signal flow diagram shows four SampleClouds combined in a Mixer, each controlled by an envelope generator. The parameter fields are shown on the right. In these labeled fields, numerical values are entered, typically in the form of Capytalk, to control the sonic parameters of the selected Sound object. In this figure, we see (1) a Sound object pasted into a parameter field to control the Amplitude, (2) the EventValues !LogFreq and !TimeIndex1 controlling the Frequency and TimeIndex parameters, respectively, (3) the Capytalk expression 1 - (1 ramp: 10 s) controlling the Density of the cloud, and (4) constant values in the FreqJitter, TimeIndexJitter, GrainDur, GrainDurJitter, PanJitter, MaxGrains, and Seed fields. Where a disk icon appears in a parameter field, Kyma expects a file to be loaded. In the case of the SampleCloud, audio files are loaded to specify the audio to be granulated (Sample) and the envelope shape of each grain (GrainEnv).

Fig. 9
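One way to picture how a single parameter field can hold a constant, an expression, or an EventValue is as a small dispatch on the kind of thing stored in the field. The Python below is a hypothetical model, not Kyma's mechanism: `resolve`, `ramp`, and the `controls` dictionary are invented for this sketch, `ramp` is assumed to rise from 0 to 1 over its duration and then hold, and the numeric values are placeholders.

```python
def ramp(duration):
    """Rise linearly from 0 to 1 over `duration` seconds, then hold at 1."""
    def value(t):
        return min(max(t / duration, 0.0), 1.0)
    return value


def resolve(field, t, controls):
    """Return the number a parameter field yields at time t (in seconds)."""
    if isinstance(field, str) and field.startswith("!"):
        return controls[field]  # EventValue: current fader or dial position
    if callable(field):
        return field(t)         # time-varying expression or pasted-in Sound
    return float(field)         # constant or already-evaluated arithmetic


rise = ramp(10.0)


def density(t):
    # Rough analogue of: 1 - (1 ramp: 10 s)
    return 1 - rise(t)


fields = {
    "Frequency": "!LogFreq",
    "TimeIndex": "!TimeIndex1",
    "Density": density,
    "GrainDur": 0.05,     # placeholder constant
    "MaxGrains": 4 * 8,   # arithmetic evaluated before use
}
controls = {"!LogFreq": 0.4, "!TimeIndex1": 0.75}  # placeholder fader positions
snapshot = {name: resolve(f, 5.0, controls) for name, f in fields.items()}
```

Under these assumptions the Density field starts at 1, falls to 0.5 after five seconds, and reaches 0 after ten, so the cloud thins out over time while the fader-driven fields follow the performer.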

Real-time synthesis example with Wacom Tablet

For the video example below, I recorded myself speaking the word “Kyma.” I then performed spectrum analysis and TAU analysis of the audio file. You can view the waveform, TAU file, and spectrum file in Figs. 6, 7, and 8, respectively. I routed a TAU player, a SampleCloud, and a SumofSines, each with its own pan control, into a Mixer. In each of the three Sound objects, I used the Wacom tablet’s “!PenX” to control the “!TimeIndex”, “!PenY” to control the frequency, and “!PenZ” to control the amplitude. The video clip shows real-time synthesis as I draw the pen across the tablet surface with varying speed, vertical position, and pressure. The sound you hear results from these actions and the synthesis algorithm.
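The pen mapping described above amounts to turning three controller values into three synthesis parameters shared by every Sound in the mix. The Python below sketches that mapping under stated assumptions: pen values are taken to be normalized to the range 0 to 1, the frequency range and the exponential curve are hypothetical choices, and `pen_to_controls` is an invented name rather than anything provided by Kyma.

```python
def clamp(v):
    """Limit a controller value to the range 0..1."""
    return min(1.0, max(0.0, v))


def pen_to_controls(pen_x, pen_y, pen_z, low_hz=110.0, high_hz=880.0):
    """Map normalized pen position and pressure to three shared controls."""
    x, y, z = clamp(pen_x), clamp(pen_y), clamp(pen_z)
    return {
        "time_index": x,                                # horizontal position
        "frequency": low_hz * (high_hz / low_hz) ** y,  # vertical position
        "amplitude": z,                                 # pen pressure
    }


# The same control values drive all three Sounds in the mix.
controls = pen_to_controls(0.25, 0.5, 0.8)
per_sound = {name: controls for name in ("TAU player", "SampleCloud", "SumofSines")}
```

Moving the pen horizontally scrubs through the recording, moving it vertically sweeps the pitch, and pressing harder raises the level, which matches the gestures described for the video.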


Resources

Symbolic Sound Website. Current Kyma products and software, community and news

Carla Scaletti personal website

Kyma International Sound Symposiums

Kyma Community

Kyma X Tutorials

Kyma 7 Tutorials

Kyma and the SumofSines Disco Club by Jeffrey Stolet

Kyma 7+, ver. 7.38f2

Carla Scaletti and Kurt Hebel